Today in Tedium: Exactly 26 years ago this week, the world got its first look at an Apple PowerBook hacking the computer system of an alien spaceship. Like some of the other scenes of Independence Day, it was heavily exaggerated, and the result represented an infamous plot hole in a piece of pop culture that millions of people know and remember. It’s a key example of bad computer use of the kind that has dominated movies for 40 years. This week, I was watching a show on Hulu, The Bear, that appears to do an excellent job of realistically portraying the process of running a restaurant, and it got me thinking: What about technology makes it so hard to correctly display on screen like that, when Hollywood gets so many other walks of life so right? And is there room to fix it? Today’s Tedium ponders how we might actually upload a virus to the aliens. -- Ernie @ Tedium

This clip, while accurately displaying the scene in question, replaces the virus with a Rickroll. You have been warned.

What’s wrong with the alien virus upload scene from Independence Day, and is there any feasible way it could have worked?

In August of 1995, Apple released the PowerBook 5300, one of the first Apple laptops to feature a PowerPC processor. It was not exactly the highlight of the PowerBook line, with infamous design faults and issues with manufacturing, including the potential for fires with the lithium-ion battery, a technology still new to laptops at the time.

But its feature set was notable, even if some Apple die-hards were left disappointed in some places (no CD-ROM option on this model, despite plenty of hot-swappable slots). This was a top-of-the-line machine for its era, even if it was compromised, and that made it a natural alien-crusher.

So how could David Levinson (Jeff Goldblum) upload a virus to a mothership that he had no previous experience accessing? After all, it’s not like the mothership had an ADB or modem connector anywhere. There was no guarantee that the aliens even used binary.

Let’s start with the file-distribution issue. Given the port selection of the device and Levinson’s usual equipment setup, he likely had two options for connection with the alien spaceship.

- The cellular phone. The phone was attached to the back of the device and could likely be used as a modem, though odds are he didn’t have any signal in space, making what was likely an already shaky communication option a nonstarter. Didn’t help that there were no towers anywhere.

- The infrared communication port. This was the more-likely option for sharing data with an alien ship. This was a new feature to the PowerBook at the time, and made it possible to beam files to an external machine. As Macworld noted in 1998, the technology was quickly overshadowed by AirPort, a technology that would have made this a slightly easier discussion. But blasting a virus through an infrared receiver seems like the best possible option given our choices.

OK, so odds are that David Levinson dropped the payload into the alien computer by blasting the data through an IR port on the back of the machine. Perfect. Surprised he had time to develop graphics for that use case.

But all that still leaves the problem that the alien communication system may not even speak in binary, which would make it impossible to program for, let alone write a virus for.

The scene in which Levinson figures out how to upload the virus comes about six minutes into this clip of deleted scenes from ID4.

And it turns out that there’s actually a deleted scene that helps clarify this plot point. In 2016, upon the 20th anniversary of the release of the original movie, the filmmakers released a number of scenes that, among other things, portrayed Russell Casse (Randy Quaid) as a better father than the final cut of the film did; showed Levinson using his laptop to look up his ex-wife Constance Spano (Margaret Colin); and created a love interest for Alicia Casse (Lisa Jakub) with a character who isn’t otherwise seen in the final film.

But most importantly, the deleted footage shows that Levinson had figured out how to convert the pulsing data points created by the alien ships into binary, an important plot point for making the whole damn film work. All he had to do after that was program the system to accept the payload, while hoping that the payload was effective enough to push the malware through the entire system of spaceships and help remove the shields.

You know, stuff that computers can do! Especially computers from 1996!
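For the curious, here is a very rough sketch of what "converting pulses into binary" could look like in Python. To be clear, none of this comes from the film: the pulse durations, the short-means-0 and long-means-1 rule, the threshold, and the function names `pulses_to_bits` and `bits_to_bytes` are all assumptions made up for illustration.

```python
from typing import List


def pulses_to_bits(durations: List[float], threshold: float) -> List[int]:
    """Map each pulse to a bit: long pulses become 1, short pulses become 0.

    The threshold is an assumed cutoff; a real signal would need it measured.
    """
    return [1 if d >= threshold else 0 for d in durations]


def bits_to_bytes(bits: List[int]) -> bytes:
    """Pack bits into bytes, most significant bit first.

    Any trailing bits that do not fill a whole byte are dropped.
    """
    out = bytearray()
    whole = len(bits) - len(bits) % 8
    for i in range(0, whole, 8):
        value = 0
        for bit in bits[i:i + 8]:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)


# Hypothetical pulse lengths in milliseconds (invented for this example).
pulses = [1, 3, 1, 1, 3, 1, 1, 1,   # 01001000
          1, 3, 1, 1, 3, 1, 1, 3]   # 01001001
bits = pulses_to_bits(pulses, threshold=2.0)
message = bits_to_bytes(bits)
print(message)
```

The hard part, of course, is everything this sketch assumes away: knowing that the pulses encode two states at all, where a byte starts, and what the bytes mean to the machine on the other end.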

17"

The size of the monitor used in the movie WarGames, probably the most unrealistic-looking part of what was generally a fairly authentic-looking movie computer setup that relied on the CP/M operating system. (While 17-inch CRT monitors were common later in the history of the computer, they weren’t widely used at the time WarGames came out.) The monitor’s maker, Electrohome, while relatively unknown today, played an important role in the tech industry of the era, supplying the monitors that Atari and Sega used with their arcade machines in the 1970s and 1980s, according to CIO.

Five other notable examples of computers appearing in films and television in comical, unrealistic ways

1. The infamous NCIS two-person keyboard. It’s already unrealistic enough that the machine is just going off uncontrollably in this scene, but it takes a turn when NCIS Forensic Specialist Abby Sciuto (Pauley Perrette) gets a little help from NCIS Senior Field Agent Timothy McGee (Sean Murray). Mark Harmon’s grand solution to this problem? Unplug the monitor. Classic IT solution to a classic IT problem right there.

Now, to be clear, NCIS is a show that is well aware of its unrealistic computer use habits. It released a supercut of people typing on its Facebook page to celebrate its 400th episode in 2020, and this specific scene is prominently featured.

2. The tight-wire computing scene from Mission: Impossible.

One particularly effective way to ensure that the computing experience still kind of works even if it’s not at all realistic is to make it completely proprietary, which is the route the first Mission: Impossible movie (and numerous other Tom Cruise movies of this era, such as Minority Report) took. The machine being used in the Tom Cruise-hanging-from-a-wire scene relies on magneto-optical media for storage (a very uncommon format, even at the time) and leverages hardware that only kind of looks like a modern computer in passing.

While the computer is key to this scene, the real tension is happening everywhere around the computer—which is why, even if the computer itself doesn’t feel at all realistic, the scene still kind of works.

3. The Pi symbol in The Net.

I’ve used a lot of computers in my 41 years of existence, and none of them have had a Pi symbol just randomly hanging around in the corner, ready to push me into a secret computer system.

But the conceit of The Net, the 1995 cinematic introduction most of us got to the internet, is based around the idea that people did this, and by clicking the symbol (and pressing control-shift), some deep secrets would be revealed. Think of it as sort of a red-pill effect akin to The Matrix, but tied more strictly into some form of reality.

The Net is a cheesy, funny film that is very much of its time. But another element of the story proved more prescient than the Pi symbol—Sandra Bullock’s character ordering pizza online proved a direct inspiration to some of the earliest food delivery services.

4. Richard Pryor hacking a company’s payroll system in Superman 3.

While most of the tales of bad computer portrayals are understandably attached to the early GUI era, Richard Pryor turns out to have been ahead of his time on this front, with his character in this 1983 Superman sequel successfully hacking his company’s computer system.

Pryor’s August “Gus” Gorman used the fractions-of-cents strategy that the comparatively computer-accurate Office Space later became more famous for, but did so in a very comical way, simply asking to turn off all security by typing a very basic command, “override all security.” If modern computers worked like this, we would have no secrets.

Whenever we talk about the Superman sequels sucking, one can’t help but feel bad for Richard Donner.

5. Harrison Ford hacking an iPod Mini to use an image scanner from a fax machine in 2006’s Firewall.

Did anyone ever tell him about Disk Mode? This scene comes from a late-period Ford film in which he plays the head of security for a regional bank who has been forced to steal from his employer. The film involves some fairly unrealistic hacking scenes, but this one is a strangely unnecessary form of MacGyvering, in which the music player is used to steal $100 million using some unusual hacking methods.

Ford’s character says the device won’t know the difference between “10,000 files and 10,000 songs,” which sure, whatever. But this is clearly the design choice of a man who was stuck in a plot point that could have been resolved if the movie came out a year later, when the iPhone was a thing.

Why can’t a show where people use computers feel more like The Bear?

Like a lot of people recently, I’ve been totally entranced with The Bear, a Hulu/FX show that focuses on a greasy-spoon restaurant in a working-class area of Chicago.

The best way to describe this show is as a “pressure cooker,” where things can blow up at a moment’s notice—but the tension, if carefully allowed to simmer, leads to amazing results. Everyone is stressed. Everyone is angry. But they make great food seemingly because of that tension.

It’s a freaking great show, and one that captures a specific experience in a fresh way.

I don’t know if you’ve ever used a computer in a high-pressure situation, but it can feel like this at times—say, you’re trying to hit a deadline, or you say something stupid on social media and suddenly your phone blows up. I’m sure if you’ve been trading crypto lately you’ve had Bear moments every other day for the past month.

A lot of people in a lot of industries do work that is acceptably displayed on screen. But computing isn’t one of those fields for some reason.

It’s telling that the most realistic uses of computers in film happened in romantic comedies like You’ve Got Mail, which used email basically as regular people use it. The more practical the use case, the easier the integration.

But think about a film like Independence Day or a show like NCIS. They have to explain a lot of complicated plot points in a matter of seconds, plot points that would fail to make sense to viewers if they were explained using an actual computer. And in the case of a TV show, there’s no guarantee that the audio will even be playing; the visuals have to do a lot of heavy lifting.

So exaggeration is, by necessity, the name of the game. I mean, if your computer actually gets a virus or suffers from a security hole, it may take you minutes or even hours to notice something is wrong. It doesn’t always announce itself.

In fact, a security exploit might work like Tom Cruise in Mission: Impossible—remaining as quiet as possible, doing everything it can to not appear visible in front of you until it’s too late and you’ve already been breached.

The problem is, movies and television shows don’t deal in subtlety. They have maybe a few seconds to get a plot point across, and computers are simply built for deeper experiences than that.

Life doesn’t move as fast as the plot line of a movie desires, so filmmakers kind of have to fudge it.

It’s likely that this trope of technology being shown in an incredibly fake way eventually evolved from an obvious misunderstanding of the tech to something closer to a blatant, knowing cue for viewers, a Wilhelm scream for computing nerds.

And there are signs that things are improving to some degree, possibly because technical knowledge has grown increasingly mainstream. Shows like Halt and Catch Fire and Mr. Robot have been lauded for actually understanding how computers work.

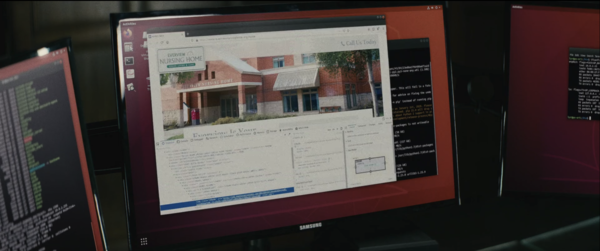

An actual mainstream movie accurately displaying an Ubuntu desktop in the year 2021. It can be done.

And even films that aren’t really about computers seem to understand the dynamic better these days. As I wrote last year, the Bob Odenkirk vehicle Nobody, which uses tech in a way not unlike any other action film, correctly portrayed Ubuntu in a scene in a way that looked at least reasonably like how it might have been used in the real world. Ubuntu!

Going back to The Bear and the authenticity it has that many shows about technology do not have, I think at some point, the reason for this might be simple: When it comes down to it, most people know how to chop an onion; most people don’t know how to hack the Gibson, so there is far more room for creative liberties with computing than there is with running a kitchen.

It just turns out that when you depict computer use incorrectly, it looks pretty comical to the bystander who actually knows how a computer works.

This week, Variety published a news story explaining how Winona Ryder, the lead in Stranger Things, had played an important role on the show that wasn’t necessarily part of her job description: Being a child of the ’80s, she has traditionally helped the Duffer Brothers, the show’s creators, with historical accuracy—an important element of a show that tries to recapture a very specific period.

“She knew all of these minute, tiny details they didn’t even know, and they had to change things in the script based on that…It’s just kind of epic how wild her mind is and how it goes to all these different corners,” explained David Harbour, a.k.a. Jim Hopper, in an interview with the outlet.

This is actually fairly accurate, considering. (via Amitopia)

And while the show very occasionally gets it wrong, it has been surprisingly solid when it comes to the technology part of all of this. Earlier this season, the show somewhat accurately displayed an Amiga 1000, one of the greatest computers to ever exist.

Was it perfect? No, but in a way that feels like perhaps they knew they were getting it sort of wrong. Suzie, the long-distance girlfriend of Dustin, was programming in the C# language, a computer language that Microsoft released in the year 2000, 14 years after the events of this season. On top of that, the Amiga’s blue interface was replaced with a green-and-black theme for some reason, which made the screen look monochrome (although the screen is in color, as the cursor is brown).

This is way wronger than the Stranger Things Amiga is.

So, no, not perfect. But a hell of a lot closer than another Netflix film, Fear Street: 1994, got with an Amiga 2000. The film mixed its computing eras to disastrous effect, putting a Windows 3.1 AOL screen on the Amiga, despite the fact that AOL never had a native Amiga version, the Amiga never ran Windows, and 5.25-inch floppies were not the medium Amigas generally used.

Perhaps some things will never change.

--

May your favorite show feature the right gadgets. Find this one an interesting read? Share it with a pal!